Statler

State-maintaining language models for embodied reasoning.

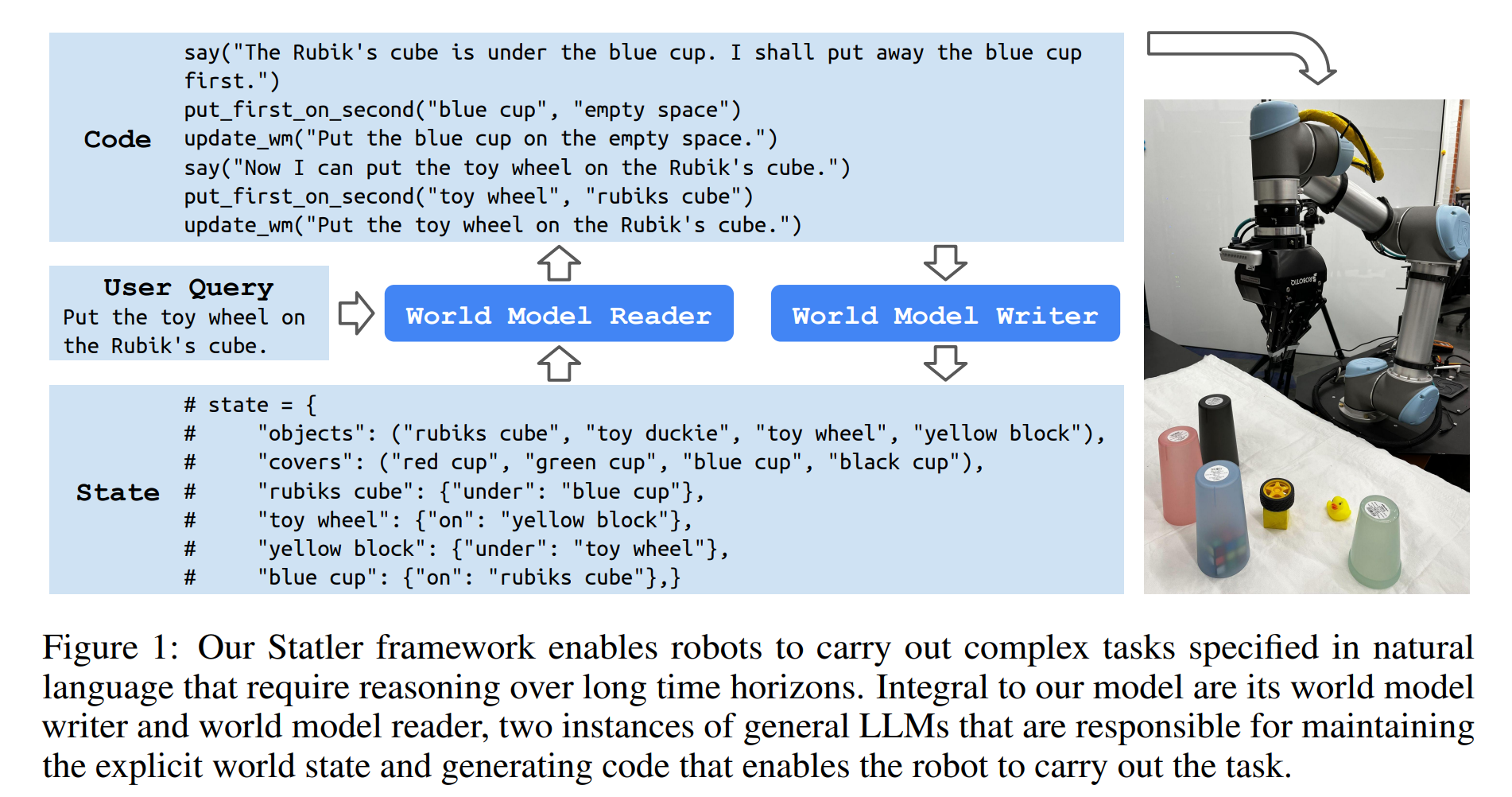

With other members of RIPL at TTIC, we developed Statler, building on the existing work of Code as Policies. The gist of Statler is a state-maintaining language model: an explicit representation of the world state is maintained over time, which is intended to support long-horizon operations. The paper was accepted to ICRA 2024.

- Upon receiving the user’s query, Statler feeds it to the `world_model_reader`, which extends it with the current world state and uses the result as the prompt to elicit code generation from a large language model (LLM). Specifically, GPT-3.5 is used.
- The generated code not only enables the robot to perform the action in response, but also calls the `world_model_writer` with the necessary information to update the world state.
- `world_model_reader` and `world_model_writer` are both instances of general-purpose GPT-driven LLMs, separately tuned via few-shot prompting. We found that splitting the reasoning burden across different LLMs results in improved accuracy and stability for long-horizon operations.
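
The reader/writer loop described above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the actual Statler implementation: the prompt layout, the JSON state format, the `run_query` helper, and the `put` and `update_wm` functions exposed to the generated code are all hypothetical, and the two LLMs are replaced by offline stubs so the example runs without any API access.

```python
import json
from typing import Callable, Dict, List, Tuple

# Hypothetical type: any function that maps a prompt string to a completion.
LLM = Callable[[str], str]


def world_model_reader(query: str, state: Dict, llm: LLM) -> str:
    """Extend the query with the current world state and ask the LLM for code."""
    prompt = f"# state = {json.dumps(state, sort_keys=True)}\n# query: {query}\n"
    return llm(prompt)


def world_model_writer(update: str, state: Dict, llm: LLM) -> Dict:
    """Ask a second LLM for the world state that results from an update."""
    prompt = f"# state = {json.dumps(state, sort_keys=True)}\n# update: {update}\n"
    return json.loads(llm(prompt))


def run_query(query: str, state: Dict, reader_llm: LLM,
              writer_llm: LLM) -> Tuple[Dict, List[Tuple[str, str]]]:
    """Run one query: generate code, execute it, and return the new state."""
    actions: List[Tuple[str, str]] = []
    box = {"state": state}  # mutable holder so the closure can replace the state

    def put(obj: str, target: str) -> None:
        # Assumed robot primitive; here it only records the requested action.
        actions.append((obj, target))

    def update_wm(update: str) -> None:
        # Called by the generated code to hand the update to the writer.
        box["state"] = world_model_writer(update, box["state"], writer_llm)

    code = world_model_reader(query, box["state"], reader_llm)
    # Executing model-generated code is unsafe outside a sandbox; sketch only.
    exec(code, {"put": put, "update_wm": update_wm})
    return box["state"], actions


def stub_reader_llm(prompt: str) -> str:
    # Stand-in for the code-generating LLM: always returns the same snippet.
    return 'put("red block", "bowl")\nupdate_wm("red block is in the bowl")'


def stub_writer_llm(prompt: str) -> str:
    # Stand-in for the state-updating LLM: always returns the same state.
    return json.dumps({"bowl": ["red block"], "table": []})


if __name__ == "__main__":
    initial = {"table": ["red block"], "bowl": []}
    new_state, done = run_query("put the red block in the bowl", initial,
                                stub_reader_llm, stub_writer_llm)
    print(done)
    print(new_state)
```

In a real system the two stubs would be replaced by calls to separately few-shot-prompted models, and the returned state would be passed into the next query so that it persists across a long sequence of instructions.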